Intro: The Physics of Artificial Intelligence

AI has taken the world by storm, capturing headlines, investor capital, and public imagination. But behind the interfaces and rapid responses lies an overlooked fact: AI is infrastructure at scale.

Every query to a model like ChatGPT kicks off trillions of computations, powered by vast clusters of GPUs and other specialized chips housed in energy-hungry data centers. As models grow larger and smarter, their physical demands have exploded. And yet today’s best AI systems are still vastly less efficient than the human brain, which runs on just 20 watts, less than a typical incandescent light bulb.

Since the Transformer breakthrough in 2017, model scale has grown exponentially. GPT-3 used 175 billion parameters; GPT-4 is estimated to exceed a trillion, though OpenAI has not disclosed the figure. Each new generation has required orders of magnitude more compute, which means more hardware, more power, and more capital investment.
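To see why compute grows so quickly, a common rule of thumb from the scaling-law literature (our assumption here, not a figure from this article's sources) puts the training cost of a dense Transformer at roughly 6 floating-point operations per parameter per training token: C ≈ 6·N·D. The sketch below applies it to GPT-3, using the 175 billion parameters cited above and the roughly 300 billion training tokens reported in the GPT-3 paper. The "10x larger" model is purely hypothetical.

```python
def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute for a dense Transformer.

    Rule of thumb C ~= 6 * N * D: about 2 FLOPs per parameter per token for
    the forward pass and about 4 for the backward pass.
    """
    return 6.0 * params * tokens


# GPT-3: 175B parameters, ~300B training tokens.
gpt3 = training_flops(175e9, 300e9)
print(f"GPT-3 training compute ~ {gpt3:.2e} FLOPs")

# Hypothetical model: 10x the parameters trained on 10x the tokens.
bigger = training_flops(1.75e12, 3e12)
print(f"10x params and 10x tokens -> {bigger / gpt3:.0f}x the compute")
```

Because compute scales with the product of model size and data, growing both by an order of magnitude multiplies the compute bill by roughly a hundred.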

In this article, we unpack the physical infrastructure behind AI: chips, cooling systems, energy grids and fiber networks. For anyone building, investing in, or scaling AI, understanding this physical foundation is no longer optional. It’s the key to where AI is headed next.

The Body – Breaking Down the Anatomy

1. Computation: Chips & Compute Hardware

At the core of modern AI systems lies an immense amount of computation, executed by specialized chips that perform trillions of mathematical operations per second. That throughput lets neural networks detect patterns across massive datasets of words, pixels, proteins, and even sensor data.

Unlike general-purpose CPUs, AI chips are built for parallel processing: executing thousands of operations simultaneously. This architecture is what makes large language models possible, and it has shifted the entire industry toward three dominant categories of processors:

- GPUs (Graphics Processing Units) – Originally built for graphics, now the dominant force in AI due to their ability to process large-scale matrix operations in parallel; widely used, highly versatile, and led by Nvidia’s H100 and AMD’s MI300.

- TPUs (Tensor Processing Units) – Google’s custom-built chips designed specifically for AI; optimized for both training and inference using efficient systolic arrays, but limited to Google’s ecosystem.

- AI-First Chips – Built from the ground up for machine learning by startups like Cerebras and Graphcore; focus on minimizing data bottlenecks and maximizing efficiency at scale, though still early in ecosystem support.
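To make the systolic-array idea concrete, here is a toy, cycle-by-cycle simulation of an output-stationary systolic array in plain Python. It is a teaching sketch under simplifying assumptions (one multiply-accumulate per processing element per cycle, inputs skewed so that row i and column j enter i and j cycles late), not a model of how any real TPU is built. Each processing element (PE) owns one output cell and, on every cycle, multiplies the pair of operands flowing past it into a running sum, so many multiply-accumulates happen at once.

```python
def systolic_matmul(a, b):
    """Toy cycle-level simulation of an output-stationary systolic array.

    PE (i, j) owns output C[i][j]. Row i of `a` enters the array i cycles late
    and column j of `b` enters j cycles late, so operands a[i][k] and b[k][j]
    meet at PE (i, j) on cycle t = i + j + k.
    Returns (product, cycles used, peak number of PEs busy in one cycle).
    """
    m, inner = len(a), len(a[0])
    n = len(b[0])
    if len(b) != inner:
        raise ValueError("inner dimensions must match")
    acc = [[0] * n for _ in range(m)]
    cycles = m + n + inner - 2  # last pair meets at t = (m-1) + (n-1) + (inner-1)
    peak = 0
    for t in range(cycles):
        busy = 0
        # In hardware every PE acts in the same clock cycle; these loops just visit them.
        for i in range(m):
            for j in range(n):
                k = t - i - j
                if 0 <= k < inner:
                    acc[i][j] += a[i][k] * b[k][j]  # one multiply-accumulate
                    busy += 1
        peak = max(peak, busy)
    return acc, cycles, peak


product, cycles, peak = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product, cycles, peak)  # [[19, 22], [43, 50]] 4 3
```

The data-reuse pattern is the point: each operand is fetched once and then passed from neighbour to neighbour, which cuts trips to memory compared with fetching every operand separately.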

As models scale, the industry is running into a wall: chip supply can’t keep up. A single Nvidia H100 can cost over $40,000, and training a frontier model requires thousands of them. Meanwhile, U.S. export restrictions have tightened chip flows to China, adding geopolitical urgency to the mix.
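How many GPUs does "thousands" mean in practice? A rough sketch: divide the training compute by what each GPU actually delivers over the run. Every input below is an assumption for illustration: 1e25 FLOPs for a hypothetical frontier run, about 989 TFLOPS of dense BF16 throughput per H100 SXM (Nvidia's listed peak; real runs may use other variants or precisions), 40% utilization of that peak (a typical but assumed value), a 90-day run, and the $40,000 unit price quoted above.

```python
import math

SECONDS_PER_DAY = 86_400


def gpus_needed(train_flops, peak_flops_per_gpu, utilization, days):
    """Number of GPUs required to finish `train_flops` within `days`.

    Effective throughput per GPU = peak * utilization, i.e. the fraction of
    peak actually achieved once communication and memory stalls are counted.
    """
    effective = peak_flops_per_gpu * utilization
    seconds = days * SECONDS_PER_DAY
    return math.ceil(train_flops / (effective * seconds))


# Hypothetical inputs (see the assumptions above).
n = gpus_needed(train_flops=1e25, peak_flops_per_gpu=989e12, utilization=0.40, days=90)
capex = n * 40_000  # purchase price only: no networking, power, or buildings
print(f"{n:,} GPUs, ~${capex / 1e6:.0f}M in chips alone")
```

Halving utilization doubles the GPU count, which is one reason the data-movement bottlenecks covered later in this article translate so directly into hardware spending.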

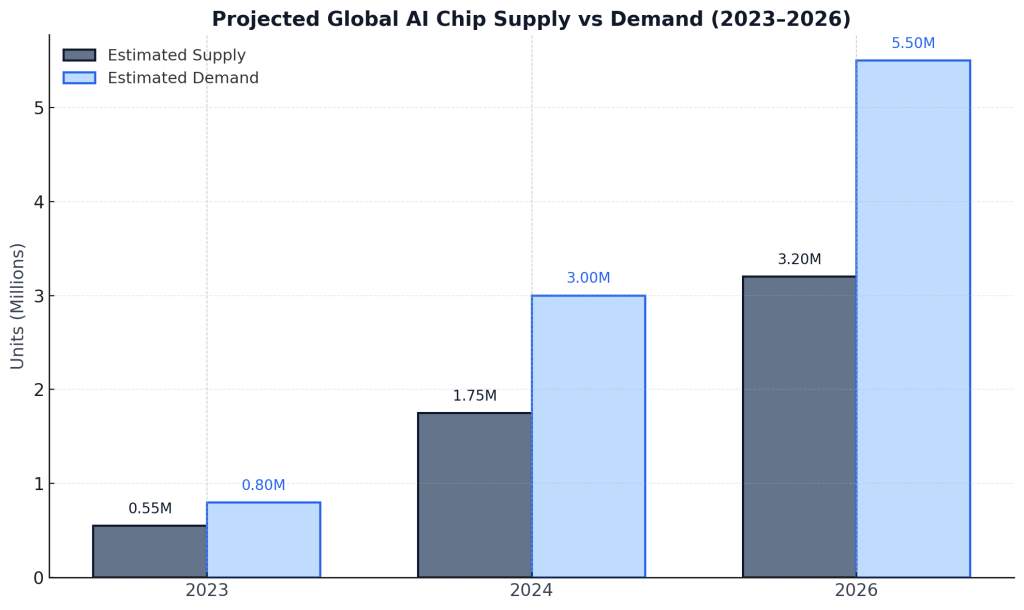

- In 2023, the AI chip market was valued at $53 billion. It’s expected to double by 2026, with implications for everything from venture funding to national policy.

Demand for AI chips is outpacing supply, with shortages expected to persist through 2026 despite rapid production growth. Sources: FT, McKinsey, Wells Fargo, TrendForce (2023–2024); projections extrapolated using ~30–40% CAGR in AI chip demand and ~25–30% CAGR in supply growth.
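The caption's method, extrapolating demand and supply at different compound annual growth rates (CAGR), is easy to reproduce. The sketch below indexes both demand and supply to 100 in 2023 (a simplifying assumption, since the real starting gap is not given) and uses the midpoints of the stated ranges: 35% for demand and 27.5% for supply. It also checks the growth rate implied by a $53 billion market doubling by 2026.

```python
def compound(value, rate, years):
    """Grow `value` at a constant annual `rate` for `years` years."""
    return value * (1 + rate) ** years


def implied_cagr(start, end, years):
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


# Doubling from $53B (2023) to $106B (2026) over three years.
print(f"Implied market CAGR: {implied_cagr(53, 106, 3):.1%}")

# Demand vs. supply, both indexed to 100 in 2023 (hypothetical baseline).
for year in range(2023, 2027):
    t = year - 2023
    demand = compound(100, 0.35, t)
    supply = compound(100, 0.275, t)
    print(f"{year}: demand {demand:6.1f}  supply {supply:6.1f}  gap {demand / supply - 1:5.1%}")
```

A market that doubles in three years is growing at about 26% a year. Even a modest difference in growth rates compounds into a widening shortfall, which is why the projected shortage persists despite rapid production growth.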

2. Infrastructure: The Data Centers

The rise of large models has triggered a global buildout of data centers designed to house tens of thousands of GPUs and to draw the megawatts of power that frontier AI computation requires.

These centers are defined by:

- Density – Thousands of chips packed into tight racks.

- Power – Each facility draws megawatts, often as much as a small city.

- Cooling – Liquid and immersion cooling to keep machines from overheating.

- Speed – Real-time data movement at terabit-per-second speeds.
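The density and power points connect directly: a facility's draw is roughly the number of accelerators times their power, plus everything else in the building. A back-of-the-envelope sketch with assumed inputs: 700 W per GPU (the maximum TDP Nvidia lists for the H100 SXM), a hypothetical 1.5x multiplier for the CPUs, memory, networking, and storage in each server, and an assumed power usage effectiveness (PUE) of 1.3 to cover cooling and power-conversion losses.

```python
HOURS_PER_YEAR = 8_760


def facility_power_mw(num_gpus, gpu_watts=700, server_overhead=1.5, pue=1.3):
    """Estimate total facility power draw in megawatts.

    IT load = GPUs * watts per GPU * overhead for the rest of each server
    (the 1.5x overhead is a hypothetical value).
    Facility load = IT load * PUE (cooling, power conversion, lighting).
    """
    it_watts = num_gpus * gpu_watts * server_overhead
    return it_watts * pue / 1e6


mw = facility_power_mw(20_000)  # a hypothetical 20,000-GPU cluster
annual_gwh = mw * HOURS_PER_YEAR / 1_000  # assumes it runs flat-out all year
print(f"~{mw:.1f} MW continuous, ~{annual_gwh:.0f} GWh per year")
```

Because PUE multiplies the entire IT load, cooling efficiency matters almost as much as chip efficiency at this scale.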

As demand has surged, tech companies have been investing aggressively: Microsoft announced plans to spend $80 billion on AI data centers in 2025 alone, a 10x increase from its annual data center budget just five years ago. Amazon is building new facilities such as Project Rainier, and Meta is building a $10B AI supercomputing hub in Louisiana.

But the response is not only private; governments have entered the race too:

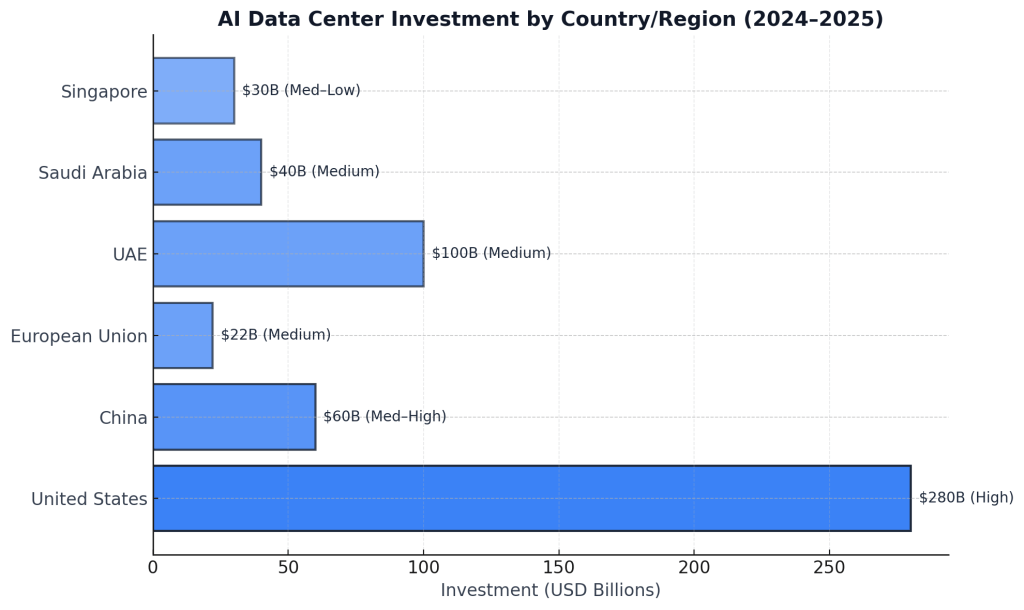

- The United States is leading on sheer capital scale, with over $280 billion projected in AI data center investment, supported by private-sector dominance and hyperscaler muscle.

- The UAE and Saudi Arabia are using state-backed capital and cheap energy to become global compute hubs.

- China, despite export restrictions, is aggressively scaling domestic AI infrastructure, pairing state subsidies with internal chip innovation.

- Singapore and the EU are taking a more measured approach, constrained by space, energy prices, and regulation, but are investing strategically in sustainable, high-efficiency builds and sovereign compute initiatives.

Meanwhile, physical limits are becoming apparent: in Northern Virginia, the world’s largest data center hub, grid connection wait times have reached 7 years: a sign of enormous infrastructural strain.

The United States leads global investment in AI infrastructure, followed by China and emerging digital powers like the UAE and Saudi Arabia. Confidence levels (in parentheses) reflect the strength of available data, from confirmed hyperscaler CapEx to reported strategic partnerships and public initiatives. Sources: FT, Bloomberg, McKinsey, TrendForce, local government disclosures, company announcements (2023–2024).

3. Fuel: AI’s Energy Shockwave

Besides being computationally intensive, AI is also incredibly energy-hungry. Training runs and inference (every query a model answers) demand enormous amounts of electricity, and the energy footprint grows closely in step with model complexity.

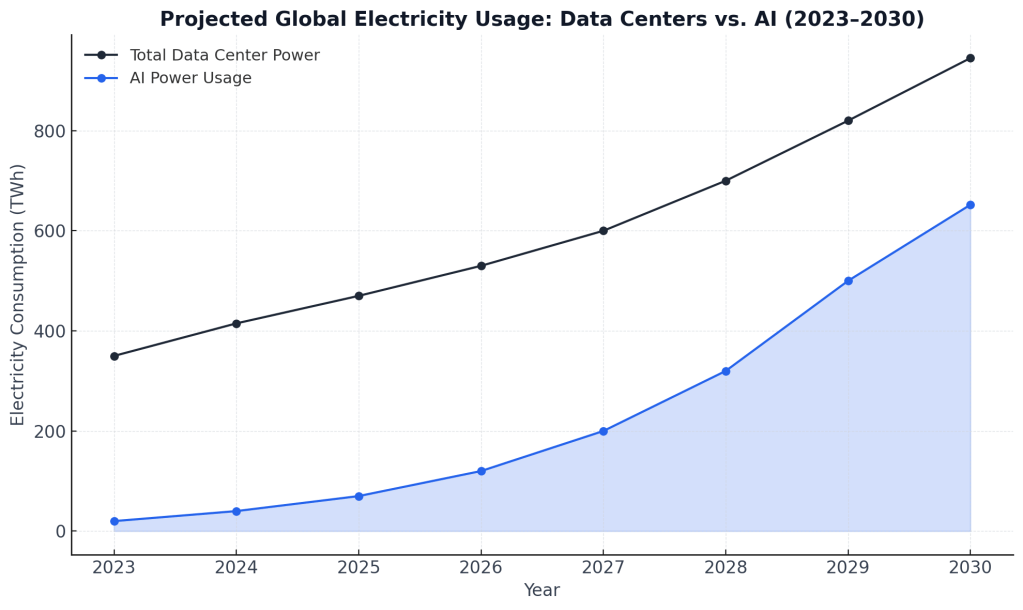

In 2024, data centers consumed an estimated 415 terawatt-hours (TWh), roughly 1.5% of global electricity use. That figure is set to more than double by 2030, largely due to AI workloads. To put it in perspective, the 2030 total would exceed Germany’s entire annual electricity demand.
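Annual energy totals are easier to picture as continuous power. Dividing by the 8,760 hours in a year converts terawatt-hours into average gigawatts. The sketch below does that for the 415 TWh estimate, backs out the global total implied by the 1.5% share, and treats "more than double" as exactly 2x by 2030, which is our simplifying assumption (a lower bound).

```python
HOURS_PER_YEAR = 8_760


def average_gw(twh_per_year):
    """Average continuous power (GW) implied by an annual energy total (TWh)."""
    return twh_per_year * 1_000 / HOURS_PER_YEAR  # TWh -> GWh, then per hour


dc_2024 = 415                  # TWh, data centers in 2024
global_2024 = dc_2024 / 0.015  # global total implied by the ~1.5% share
dc_2030 = 2 * dc_2024          # "more than double": 2x taken as a lower bound

print(f"2024 data centers: ~{average_gw(dc_2024):.0f} GW on average")
print(f"Implied global use: ~{global_2024:,.0f} TWh")
print(f"2030 (2x): ~{dc_2030} TWh, ~{average_gw(dc_2030):.0f} GW on average")
print(f"Implied growth: {(dc_2030 / dc_2024) ** (1 / 6) - 1:.1%} per year")
```

Sustaining roughly 12% annual growth for six years is enough to double consumption.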

However, this growth isn’t driven only by training massive models. As AI inference (the everyday operation of ChatGPT, Claude, etc.) takes center stage, its energy use is expected to outpace training-related energy use by 2027. As shown below, AI-specific electricity use could exceed 650 TWh by 2030, surpassing the total consumption of many countries today.

Electricity use by data centers is set to more than double by 2030, with AI expected to consume over 650 TWh, more than many countries use today. Sources: IEA (2024), McKinsey (2023), Forbes/Wells Fargo projections (2024).
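Why does inference overtake training? A training run is a one-off energy cost, while inference energy keeps accruing with every query for as long as the model is in service. The sketch below compares the two. All numbers are hypothetical placeholders, not measurements: a 50 GWh training run, 0.3 Wh per query, and one billion queries a day.

```python
def inference_gwh(queries_per_day, wh_per_query, days):
    """Cumulative inference energy in GWh."""
    return queries_per_day * wh_per_query * days / 1e9  # Wh -> GWh


def breakeven_days(training_gwh, queries_per_day, wh_per_query):
    """Days of serving after which inference energy exceeds training energy."""
    daily_gwh = inference_gwh(queries_per_day, wh_per_query, 1)
    return training_gwh / daily_gwh


TRAINING_GWH = 50       # hypothetical one-off training cost
QUERIES_PER_DAY = 1e9   # hypothetical traffic
WH_PER_QUERY = 0.3      # hypothetical energy per query

days = breakeven_days(TRAINING_GWH, QUERIES_PER_DAY, WH_PER_QUERY)
year = inference_gwh(QUERIES_PER_DAY, WH_PER_QUERY, 365)
print(f"Inference overtakes training after ~{days:.0f} days")
print(f"One year of serving: ~{year:.0f} GWh vs {TRAINING_GWH} GWh to train")
```

Under these assumptions, a widely used model spends more energy answering queries than it took to train within a few months, and the gap widens as usage grows.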

This energy surge has started to reshape both Big Tech’s strategies and the energy production of major countries. Three key trends stand out:

- Nuclear Revival – Tech giants are turning to nuclear: Amazon acquired a nuclear-powered data center in Pennsylvania, and Microsoft may reopen Three Mile Island.

- Geopolitical Friction – U.S. operators warn that anti-renewable policies could stall AI growth and raise costs, while countries and regions like Finland, Quebec, and Brazil promote themselves as low-carbon AI hubs.

- Environmental Cost – AI’s carbon footprint reached 180 million tons of CO₂ in 2025 and could hit 500 million tons by 2035 if demand keeps surging.

AI’s resource consumption deserves focused attention and discussion, and we will explore it further in a future article. The long-term impact of AI’s growing energy and water use remains uncertain, especially if data center expansion continues as projected.

4. Flow: Memory, Bandwidth, and Bottlenecks

Finally, beyond computation and energy, training AI models depends on the speed of data movement. As model sizes have exploded, data movement has become the most underestimated constraint in AI infrastructure. Modern models process petabytes of data, and as they scale, the real challenge becomes moving that data without delay.
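One standard way to see why data movement, rather than raw compute, often sets the limit is the roofline model: a kernel's attainable throughput is the lesser of the chip's peak compute and its memory bandwidth times the kernel's arithmetic intensity (FLOPs performed per byte moved). The sketch assumes H100 SXM-class figures of about 989 TFLOPS dense BF16 and 3.35 TB/s of HBM bandwidth, per Nvidia's published specs; the two example kernels use idealized byte counts.

```python
PEAK_FLOPS = 989e12        # assumed dense BF16 peak, FLOP/s
HBM_BYTES_PER_S = 3.35e12  # assumed HBM bandwidth, bytes/s
BYTES_PER_ELEM = 2         # BF16


def attainable_flops(intensity):
    """Roofline: min(peak compute, bandwidth * arithmetic intensity)."""
    return min(PEAK_FLOPS, HBM_BYTES_PER_S * intensity)


def matvec_intensity(n):
    """n x n matrix times a vector: 2n^2 FLOPs over ~n^2 + 2n elements moved."""
    flops = 2 * n * n
    bytes_moved = (n * n + 2 * n) * BYTES_PER_ELEM
    return flops / bytes_moved


def matmul_intensity(n):
    """n x n times n x n: 2n^3 FLOPs over 3n^2 elements (A, B in; C out)."""
    flops = 2 * n ** 3
    bytes_moved = 3 * n * n * BYTES_PER_ELEM
    return flops / bytes_moved


ridge = PEAK_FLOPS / HBM_BYTES_PER_S  # intensity where a kernel stops being memory-bound
print(f"Ridge point: ~{ridge:.0f} FLOPs per byte")
for name, ai in [("matvec", matvec_intensity(8192)), ("matmul", matmul_intensity(8192))]:
    pct = attainable_flops(ai) / PEAK_FLOPS
    print(f"{name}: {ai:7.1f} FLOP/byte -> {pct:.1%} of peak")
```

Under these assumptions, a kernel needs roughly 300 FLOPs per byte to keep the chip busy. Low-intensity operations like the matrix-vector product reach well under 1% of peak, however fast the arithmetic units are, which is why memory bandwidth matters as much as compute.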

To keep up, the industry relies on three key components:

- High-Bandwidth Memory (HBM) – Vertically stacked memory dies packaged right beside the processor, giving GPUs access to model weights and training data up to 10x faster than conventional RAM.

- Ultra-Fast Networking – Technologies like InfiniBand and NVLink allow GPUs to communicate at terabit-per-second speeds, essential for synchronizing model updates across thousands of chips.

- Private Fiber Infrastructure – Tech giants like Microsoft and Google are laying their own undersea cables to move data globally with minimal latency and full control.
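Synchronizing model updates usually means an all-reduce: every GPU ends up with the sum of all GPUs' gradients. The sketch below simulates ring all-reduce, one common algorithm for this, in plain Python, with lists standing in for GPU buffers. It is a toy under stated assumptions (buffer length divisible by the number of workers, and steps that real hardware runs concurrently simulated one after another), not a description of how any particular communication library schedules its transfers.

```python
def ring_allreduce(buffers):
    """Sum equal-length buffers across workers using the ring algorithm.

    Phase 1 (reduce-scatter): in N-1 steps, each worker passes one chunk to its
    right-hand neighbour, which adds it to its own copy. Afterwards worker i
    holds the fully summed chunk (i + 1) % N.
    Phase 2 (all-gather): in N-1 more steps, the finished chunks circulate
    around the ring until every worker has all of them.
    """
    n = len(buffers)
    size = len(buffers[0])
    if size % n != 0:
        raise ValueError("toy version: buffer length must divide evenly by workers")
    chunk = size // n
    bufs = [list(b) for b in buffers]  # copy so the caller's data is untouched

    def span(c):
        return range(c * chunk, (c + 1) * chunk)

    for phase in ("reduce_scatter", "all_gather"):
        for s in range(n - 1):
            # Snapshot every outgoing chunk first, because sends happen simultaneously.
            sends = []
            for i in range(n):
                c = (i - s) % n if phase == "reduce_scatter" else (i + 1 - s) % n
                sends.append(((i + 1) % n, c, [bufs[i][k] for k in span(c)]))
            for dst, c, data in sends:
                for k, v in zip(span(c), data):
                    # Reduce-scatter accumulates; all-gather overwrites with the final sum.
                    bufs[dst][k] = bufs[dst][k] + v if phase == "reduce_scatter" else v
    return bufs


# Four hypothetical workers, each holding an 8-element gradient buffer.
workers = [[float(10 * w + k) for k in range(8)] for w in range(4)]
result = ring_allreduce(workers)
print(result[0])
```

Each worker sends 2(N-1)/N of its buffer in total, so per-GPU traffic stays close to twice the gradient size as the cluster grows. That is why the bandwidth of each individual link, rather than the number of GPUs alone, governs how quickly updates synchronize.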

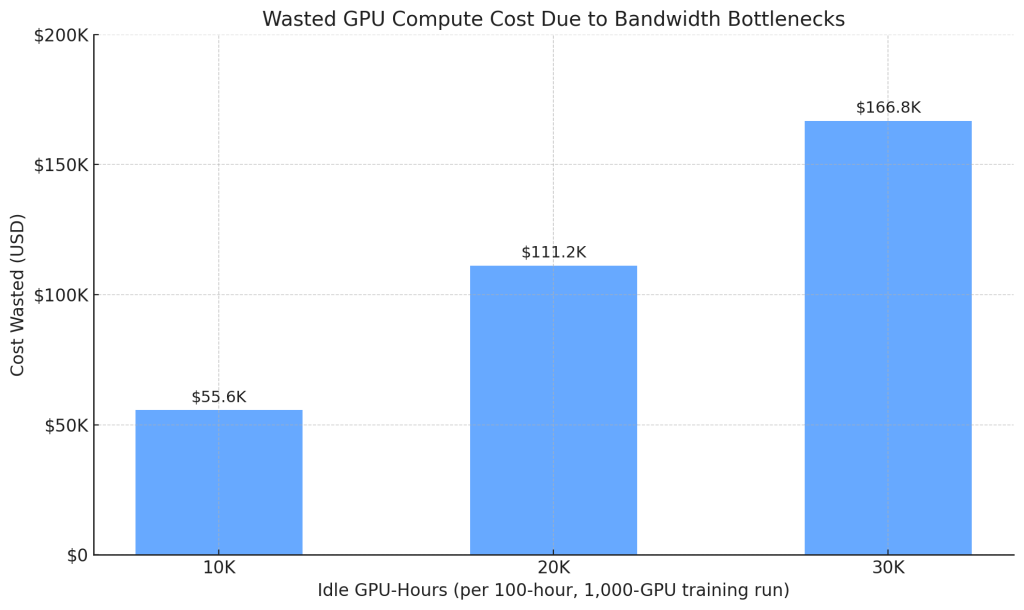

And yet even with all of this, AI systems hit a wall. A top-tier GPU like Nvidia’s H100 can move data at up to 3.6 terabits per second, but real-world setups often fall short. In large clusters, up to 30% of training time is lost to bandwidth or memory delays, which means millions of dollars in wasted compute because the data can’t keep up.

Memory and networking delays during AI training can waste tens of thousands of GPU-hours; even 10–30% idle time can cost over $160K per run.

Sources: AWS EC2 H100 pricing (2024), CoreWeave, Runpod, Lambda Labs, author estimates.
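The arithmetic behind estimates like these is simple: idle cost equals total GPU-hours times the hourly price times the fraction of time spent waiting. The sketch uses hypothetical inputs (1,000 GPUs for a 10-day run at an assumed $2.50 per GPU-hour). These are not the author's figures, and actual cloud pricing varies by provider and contract.

```python
def idle_cost(num_gpus, hours, price_per_gpu_hour, idle_fraction):
    """Return (GPU-hours wasted, dollars wasted) for a training run."""
    gpu_hours = num_gpus * hours
    wasted_hours = gpu_hours * idle_fraction
    return wasted_hours, wasted_hours * price_per_gpu_hour


# Hypothetical run: 1,000 GPUs for 240 hours at an assumed $2.50 per GPU-hour.
for idle in (0.10, 0.30):
    hours, dollars = idle_cost(num_gpus=1_000, hours=240, price_per_gpu_hour=2.50, idle_fraction=idle)
    print(f"{idle:.0%} idle: {hours:,.0f} GPU-hours, ${dollars:,.0f} wasted")
```

At these assumed rates, even 10% idle time wastes tens of thousands of GPU-hours, and the cost scales linearly with cluster size, run length, and price.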

The Invisible Engine Behind AI

Behind the immaterial interface and its machine-generated responses, AI is built on a vast and growing infrastructure of chips, data centers, national energy grids, and fiber networks. As AI grows more capable, that physical embodiment will become ever larger, more political, and more capital-intensive.

This is not just a story of software, but a story of infrastructure, capital allocation, and geopolitical strategy. As compute becomes a lever of economic power, the foundations of AI will reshape industries and entire national agendas.

In future pieces, we’ll explore how this infrastructure buildout intersects with supply chains, energy politics, semiconductor strategy, and the next generation of frontier models. Because the future of AI will be decided by the systems that make the code possible in the first place.

And we’re just getting started.

Sources: Data and estimates cited throughout this article are drawn from a range of public and proprietary reports, including McKinsey, Bloomberg, the International Energy Agency (IEA), Financial Times, White House AI Report, NVIDIA, Lambda Labs, Cowen Research, and author calculations.
